How to set up Squid as a transparent web proxy on CentOS or RHEL

In a previous tutorial, we saw how to create a gateway using iptables. This tutorial focuses on turning that gateway into a transparent proxy server. A proxy is called "transparent" when clients are not aware that their requests are processed through the proxy.

I originally wrote this tutorial for xmodulo.com

There are several benefits of using a transparent proxy. First of all, for end users, a transparent proxy can enhance their web browsing performance by caching frequently accessed web content, while introducing minimal configuration overhead for them. For administrators, it can be used to enforce various administrative policies such as content/URL/IP filtering, rate limiting, etc.

A proxy server acts as an intermediary between a client and a destination server. The client sends requests to the proxy server, which then evaluates the requests and takes the necessary actions. In this tutorial, we will set up a web proxy server using Squid, which is a robust, customizable and stable proxy server. Personally, I administered a Squid server with 400+ client workstations for about a year. Although I had to restart the service about once a month on average, CPU and storage utilization, throughput and client response time were all great.

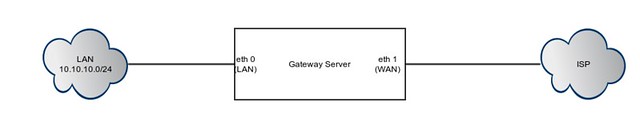

We will be configuring Squid for the following topology. The CentOS/RHEL box has one NIC (eth0) connected to the private LAN, and the other one (eth1) connected to the Internet.

Squid Installation

To set up a transparent proxy with Squid, we start by adding necessary iptables rules. These rules should help you get started, but please make sure that they do not conflict with any of the existing configuration.

# iptables -t nat -A POSTROUTING -o eth1 -j MASQUERADE

# iptables -t nat -A PREROUTING -i eth0 -p tcp --dport 80 -j REDIRECT --to-port 3128

The first rule rewrites the source address of every packet leaving through eth1 (the WAN interface) to the IP address of eth1 (i.e., it enables NAT). The second rule redirects all HTTP packets (destined to TCP port 80) arriving on eth0 (the LAN interface) to the Squid listening port (TCP 3128), instead of forwarding them out of the WAN interface right away.

We start Squid installation by using yum.

# yum install squid

Now we will modify Squid configuration to turn it into a transparent proxy. We define our LAN subnet (e.g., 10.10.10.0/24) as a valid client network. Any traffic not originating from the LAN subnet will be denied access.

# vim /etc/squid/squid.conf

visible_hostname proxy.example.tst
http_port 3128 transparent

## Define our network ##
acl our_network src 10.10.10.0/24

## make sure that our network is allowed ##
http_access allow our_network

## finally deny everything else ##
http_access deny all

Now we start Squid service and make sure it is added to startup.

# service squid start

# chkconfig squid on

Now that Squid is up and running, we can test its functionality by monitoring Squid log. Visit any URL from a computer connected to the LAN, and you should see something like the following in the log.

# tailf /var/log/squid/access.log

1402987348.816 1048 10.10.10.10 TCP_MISS/302 752 GET http://www.google.com/ - DIRECT/173.194.39.178 text/html
1402987349.416 445 10.10.10.10 TCP_MISS/302 762 GET http://www.google.com.bd/? - DIRECT/173.194.78.94 text/html

According to the log file, the machine with IP 10.10.10.10 tried to access google.com and Squid processed the request.
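On a busy proxy it is easier to read a summary than the raw log. The following is a minimal sketch, assuming the log uses the native Squid format seen in the sample entries (client IP in the third field, result code and HTTP status in the fourth). The function name summarize_access_log is made up for this example.

```shell
# Summarize a Squid access.log (native format) read from stdin.
# Prints one line per client IP and result code, followed by a request count.
summarize_access_log() {
    awk 'NF >= 4 {
             split($4, result, "/")          # "TCP_MISS/302" -> "TCP_MISS"
             count[$3 " " result[1]]++
         }
         END { for (key in count) print key, count[key] }' | sort
}

# Demo using the two log entries shown earlier in this tutorial.
summarize_access_log <<'EOF'
1402987348.816 1048 10.10.10.10 TCP_MISS/302 752 GET http://www.google.com/ - DIRECT/173.194.39.178 text/html
1402987349.416 445 10.10.10.10 TCP_MISS/302 762 GET http://www.google.com.bd/? - DIRECT/173.194.78.94 text/html
EOF
# prints: 10.10.10.10 TCP_MISS 2
```

On the proxy itself, the same function can be fed the real log with "summarize_access_log < /var/log/squid/access.log".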

The most basic form of Squid proxy server is now ready. In the rest of the tutorial, we will be tuning some parameters of Squid to control outbound traffic. Note that this is for demonstration only. Actual policies should be customized to meet your requirements.

Preliminary

Before starting the configuration, we clarify a few points.

Squid Configuration Parsing

Squid reads its configuration file from top to bottom. Access rules are evaluated in that order until one matches. The matching rule is applied, and every rule below it is ignored. So, the best practice for adding filtering rules is to specify rules in the following sequence.

- explicit allow
- explicit deny
- allow entire LAN
- deny all
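To see why the order matters, here is a toy model of first-match evaluation in shell. It is only an illustration, not Squid's real parser: the function first_match and its "action pattern" rule format are invented for this sketch, and patterns are simple shell globs rather than Squid ACLs.

```shell
# Toy model of first-match rule evaluation (NOT Squid's real parser).
# Usage: first_match CLIENT_IP "action pattern" ["action pattern" ...]
# Each pattern is a shell glob compared against the client IP.
first_match() {
    client=$1
    shift
    for rule in "$@"; do
        action=${rule%% *}      # text before the first space
        pattern=${rule#* }      # text after the first space
        case $client in
            $pattern) echo "$action"; return 0 ;;
        esac
    done
    echo "deny"                 # assumption of this toy model: no match means deny
}

# Explicit deny placed before the LAN-wide allow: the host is blocked.
first_match 10.10.10.24 "deny 10.10.10.24" "allow 10.10.10.*" "deny *"   # prints: deny

# Same rules, LAN-wide allow first: the explicit deny is never reached.
first_match 10.10.10.24 "allow 10.10.10.*" "deny 10.10.10.24" "deny *"   # prints: allow
```

The second call shows the typical mistake: a broad allow placed above a specific deny silently disables the deny.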

Squid restart vs. Squid reconfigure

Whenever the Squid configuration is modified, the Squid service normally needs to be restarted for the changes to take effect. Depending on the number of active connections, restarting the service may take a while, sometimes several minutes. LAN users will not be able to access the Internet during this time. To avoid such a service interruption, we can use the following command instead of "service squid restart".

# squid -k reconfigure

This command will allow Squid to run with updated parameters without restarting itself.
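A typo in squid.conf is easy to make, so it can help to check the file before asking the running Squid to reload it. The sketch below assumes that the installed Squid supports "squid -k parse" and that it exits with a non-zero status on a fatal configuration error. The function name safe_reconfigure and the SQUID_BIN variable are hypothetical helpers for this example.

```shell
# Reload the Squid configuration only if it parses cleanly.
# SQUID_BIN (hypothetical) lets you point at a different squid binary.
safe_reconfigure() {
    squid_bin=${SQUID_BIN:-squid}
    if "$squid_bin" -k parse; then
        # Configuration looks sane: tell the running Squid to re-read it.
        "$squid_bin" -k reconfigure
    else
        echo "squid.conf has errors; running configuration left untouched" >&2
        return 1
    fi
}
```

After editing squid.conf, run "safe_reconfigure" as root in place of a bare "squid -k reconfigure".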

Filtering LAN Hosts by IP Address

In this demonstration, we want to set up Squid such that the hosts with IP addresses 10.10.10.24 and 10.10.10.25 are prevented from accessing the Internet. We create a text file 'denied-ip-file' containing the IP addresses of all denied hosts, one per line, and reference that file in the Squid configuration.

# vim /etc/squid/denied-ip-file

10.10.10.24
10.10.10.25

# vim /etc/squid/squid.conf

## first we create an ACL to isolate the denied IPs ##
acl denied-ip-list src "/etc/squid/denied-ip-file"

## then we apply the ACL ##
http_access deny denied-ip-list ## explicit deny ##
http_access allow our_network ## allow LAN ##
http_access deny all ## deny all ##

Now we need to restart the Squid service (or run "squid -k reconfigure"). Squid will no longer honor requests from these IP addresses. If we check the Squid log, we will find 'TCP_DENIED' for requests originating from these hosts.

Filtering Websites in a Blacklist

This method will work for HTTP only. Assuming that we want to block badsite.com and denysite.com, we add the addresses to a file and add the reference to squid.conf.

# vim /etc/squid/badsite-file

badsite
denysite

# vim /etc/squid/squid.conf

## ACL definition ##
acl badsite-list url_regex "/etc/squid/badsite-file"

## ACL application ##
http_access deny badsite-list
http_access deny denied-ip-list ## previously set, no effect here ##
http_access allow our_network
http_access deny all

Please note that we have used the ACL type 'url_regex', which will match the words 'badsite' and 'denysite' in requested URLs. That is, any request whose URL contains 'badsite' or 'denysite' (e.g., badsite.org, newdenysite.com, otherbadsite.net) will be blocked.
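Because the patterns match anywhere in the URL, it is worth trying a list against a few sample URLs before deploying it. The following sketch uses grep -E as a stand-in for Squid's matcher. This is an approximation: both work with POSIX extended regular expressions and are case-sensitive by default, but the result is not guaranteed to be identical in every corner case. The function name url_is_blocked is made up for this example.

```shell
# Succeed (exit 0) if URL matches any pattern in PATTERN_FILE.
# grep -E only approximates Squid's url_regex matching.
url_is_blocked() {
    url=$1
    pattern_file=$2
    printf '%s\n' "$url" | grep -E -q -f "$pattern_file"
}

# Demo with a throwaway copy of the pattern file from this section.
patterns=$(mktemp)
printf '%s\n' badsite denysite > "$patterns"

for url in http://badsite.org/ http://newdenysite.com/ \
           http://example.com/review-of-badsite http://example.com/; do
    if url_is_blocked "$url" "$patterns"; then
        echo "blocked: $url"
    else
        echo "allowed: $url"
    fi
done
rm -f "$patterns"
```

Note the third URL: it is blocked even though the host is example.com, because the word 'badsite' appears in the path. Keep this over-blocking in mind when choosing patterns.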

Combining Multiple ACLs

We will create an access list to block clients with IP addresses 10.10.10.200 and 10.10.10.201 from accessing custom-block-site.com. Any other clients would be able to access the site. To do this, we will create an access list to isolate the IP addresses first, and then create another access list to isolate the required website. Finally, we will use both access lists simultaneously to meet the requirement.

# vim /etc/squid/custom-denied-list-file

10.10.10.200
10.10.10.201

# vim /etc/squid/custom-block-website-file

custom-block-site

# vim /etc/squid/squid.conf

acl custom-denied-list src "/etc/squid/custom-denied-list-file"
acl custom-block-site url_regex "/etc/squid/custom-block-website-file"

## ACL application ##
http_access deny custom-denied-list custom-block-site
http_access deny badsite-list ## previously set, no effect here ##
http_access deny denied-ip-list ## previously set, no effect here ##
http_access allow our_network
http_access deny all

# squid -k reconfigure

The blocked hosts should not be able to access the mentioned site now. The log file /var/log/squid/access.log should contain 'TCP_DENIED' for corresponding requests.
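When several ACLs are listed on one http_access line, all of them must match for the line to apply (a logical AND). The sketch below mimics that logic in shell, outside Squid, to show which client and URL combinations the combined rule catches. It is a simplification: the function deny_applies is invented for this example, it only handles plain IP addresses (no subnets), and grep -E merely approximates url_regex.

```shell
# Sketch of the AND logic on one http_access line (not Squid's own code).
# Succeeds only when CLIENT is listed in IP_FILE AND URL matches SITE_FILE.
deny_applies() {
    client=$1
    url=$2
    ip_file=$3
    site_file=$4
    grep -F -x -q -e "$client" "$ip_file" &&
        printf '%s\n' "$url" | grep -E -q -f "$site_file"
}

# Demo with throwaway copies of the two list files from this section.
work=$(mktemp -d)
printf '%s\n' 10.10.10.200 10.10.10.201 > "$work/ips"
printf '%s\n' custom-block-site > "$work/sites"

check() {
    if deny_applies "$1" "$2" "$work/ips" "$work/sites"; then
        echo "$1 $2 -> denied by the combined rule"
    else
        echo "$1 $2 -> falls through to the next http_access line"
    fi
}
check 10.10.10.200 http://custom-block-site.com/   # listed host, listed site
check 10.10.10.200 http://example.com/             # listed host, other site
check 10.10.10.10  http://custom-block-site.com/   # other host, listed site
rm -rf "$work"
```

Only the first combination is denied by the combined rule. The other two continue down the list and are eventually allowed by "http_access allow our_network".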

Setting Maximum Download Size

Squid can be used to control the maximum downloadable file size. We want to restrict maximum download size to 50 MB for hosts 10.10.10.200 and 10.10.10.201. We have already created the ACL 'custom-denied-list' previously to isolate the traffic from these sources. Now we will use the same access list to restrict download size.

# vim /etc/squid/squid.conf

reply_body_max_size 50 MB custom-denied-list

# squid -k reconfigure

Setting up Squid Caching Hierarchy

Squid supports caching by storing frequently accessed files in local storage. Imagine 100 users within your LAN are accessing google.com. Without caching, the logo or doodle for the page needs to be fetched individually for each request. Squid can store the logo or doodle in its cache and serve it from there on subsequent requests. This results in improved user-perceived performance as well as reduced bandwidth usage. A win-win if you will.

To enable caching, we modify the configuration file squid.conf.

# vim /etc/squid/squid.conf

cache_dir ufs /var/spool/squid 100 16 256

The numbers 100, 16 and 256 have the following meanings.

- 100 MB of storage is allocated for the Squid cache. You may increase the allocated space if you want.

- 16 first-level directories, each containing 256 subdirectories, will be used to store cache files. These two values should not be modified.

We can verify whether Squid cache is enabled from the log file /var/log/squid/access.log. For successful cache hits, we should see entries with 'TCP_HIT'.
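To get a rough idea of how effective the cache is, the hits can be counted against the total number of logged requests. This is a minimal sketch, assuming the native log format with the result code in the fourth field, and treating every result code that contains 'HIT' (for example TCP_HIT or TCP_MEM_HIT) as a cache hit. The function name cache_hit_ratio is made up for this example.

```shell
# Report how many logged requests were served from the cache.
# Reads a Squid access.log (native format) from stdin.
cache_hit_ratio() {
    awk 'NF >= 4 {
             total++
             if ($4 ~ /HIT/) hits++      # TCP_HIT, TCP_MEM_HIT, ...
         }
         END {
             if (total > 0) {
                 printf "%d of %d requests served from cache (%d%%)\n",
                        hits, total, int(100 * hits / total)
             } else {
                 print "no requests logged"
             }
         }'
}
```

Run it as "cache_hit_ratio < /var/log/squid/access.log". A freshly started proxy will show a low percentage that should grow as the cache fills up.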

To sum up, Squid is a powerful, industry standard web proxy server that is used widely by system admins worldwide. Squid provides easy access control that can be used to administer traffic originating from the LAN. It can be deployed in small companies as well as large enterprise networks. This tutorial covered only a subset of all Squid features. Refer to the official documentation for a complete feature list.

Hope this helps.
